Introduction

The O2MC I/O Platform is a managed event-processing application to perform end-to-end real-time processing on streaming data with compliancy and Privacy by design in mind. The data can come from devices, sensors, file servers, websites, databases, social media, third party API's, HR applications and more. All O2MC I/O Platform applications are configured through the files written in the patented programming language DimML (Data in Motion Machine Language).

The O2MC I/O Platform application is used for compliant data logistics by getting data from any digital source, performing specific processing for specific data tuples and distributing data to one ore more end points. Communication with these applications is bidirectional so the O2MC I/O Platform application can also be used to push messages back to the original source asynchronously.

Some examples:

- Transaction monitoring and reporting

- Product recommendation

- Fraud detection and alerting

- Cross channel content personalisation

- Web clickstream analytics

You can use these docs as you see fit. Check out our Getting started guide, or explore our case-specific tutorials in order to get a flying start. Browse the reference guide if you're looking for specific syntax or settings. If any other questions arise, please contact us at support@o2mc.io.

How does the O2MC I/O Platform application work?

The architecture provides insight in several components of the O2MC I/O platform as well as the integration with external applications. Below is a description of each of the layers in the architecture and its components. The O2MC I/O Platform components can be used from the current cloud platform but they can also be installed on premise. Details on the installation of the application can be found in the chapter Setting up. Since the events are generated at the left, in the source layer, that layer will be described first before moving to right.

Sources

The O2MC I/O Platform application can capture data from any first, second or third party source. The only prerequisite is that the source is connected to the internet. This includes IoT devices and sensors, files, websites, databases, social media, third Party API's and many more. Since the architecture is aimed at real time processing of the data, the sources provides the data the moment an interaction takes place.

Connectivity layer

The connectivity layer connects all different data sources and types to the O2MC I/O Platform application for processing. For different sources, the O2MC I/O Platform application provides several specific connector types. By default, a Rest API can be created to receive data from any source. Additionally, for websites a Single Line of Code is available to connect the website to the O2MC I/O Platform application. The SLoC consists of 1 Javascript code snippet which is the same for each page and also each website. It allows bidirectional communication and after installing the SLOC on a website, no additional IT involvement is necessary to build, enhance and maintain the O2MC I/O platform application for that source. For native apps specific SDKs are available as well.

Integration layer

The integration layer provides overall robustness on the processing the events. The event hub makes sure that all events are (eventually) processed, dealing with spikes in volume and data unavailability. Additionally, event processing is facilitated as well as providing the possibility of including reference data (e.g. IP location look up) in the event on the fly.

Real time processing

The in memory analytics component is the core of the O2MC I/O Platform application. More than tens of thousands of incoming events can be processed in memory in real time. Processing includes but is not limited to ETL, sessionization, cross device user identification, predictive machine learning and other Big Data applications. The results can be distributed to many different end point with different data sets, granularity and intervals. All of this can be defined in DimML files either using existing DimML language elements or code snippets in several different languages.

The O2MC I/O Platform application has been designed with Privacy by design in mind. As a result data governance is at the core of the O2MC I/O platform and always included in any application. To illustrate this events will not be processed if the owner of the data has not given permission to do so. Additionally, the platform facilitates an extensive multi-tenant environment with extensive authentication and application isolation.

Data storage

Apart from real time integrations with third applications, the O2MC I/O Platform application can provide structured data to databases like MySQL, Oracle and MongoDB as well as provide unstructured data to log files on many other data stores. The query and connection details can be fully customized and the data stream can be throttled to prevent spikes from causing problems in the data sources. The O2MC I/O Platform application is also able to queue output data if the destination source is temporarily unavailable. The O2MC I/O Platform application does not store data so all data is located outside the platform at any location that is desired.

Installation

General usage

Project creation

To create an example DimML implementation, follow these steps:

– In the Project Explorer tab, do a right mouse click – New – Project…

– In the New Project dialog, select the General – Project wizard and click Next >

– Name the project HelloWorld and click Finish to create the project

The created HelloWorld project should appear in the Project Explorer. To proceed with the example

DimML implementation, follow these steps:

– On the HelloWorld project, do a right mouse click – New – File

– In the New File dialog, enter www.o2mc.io.dimml as File name and click Finish

– Choose Yes when asked to convert HelloWorld to an Xtext project

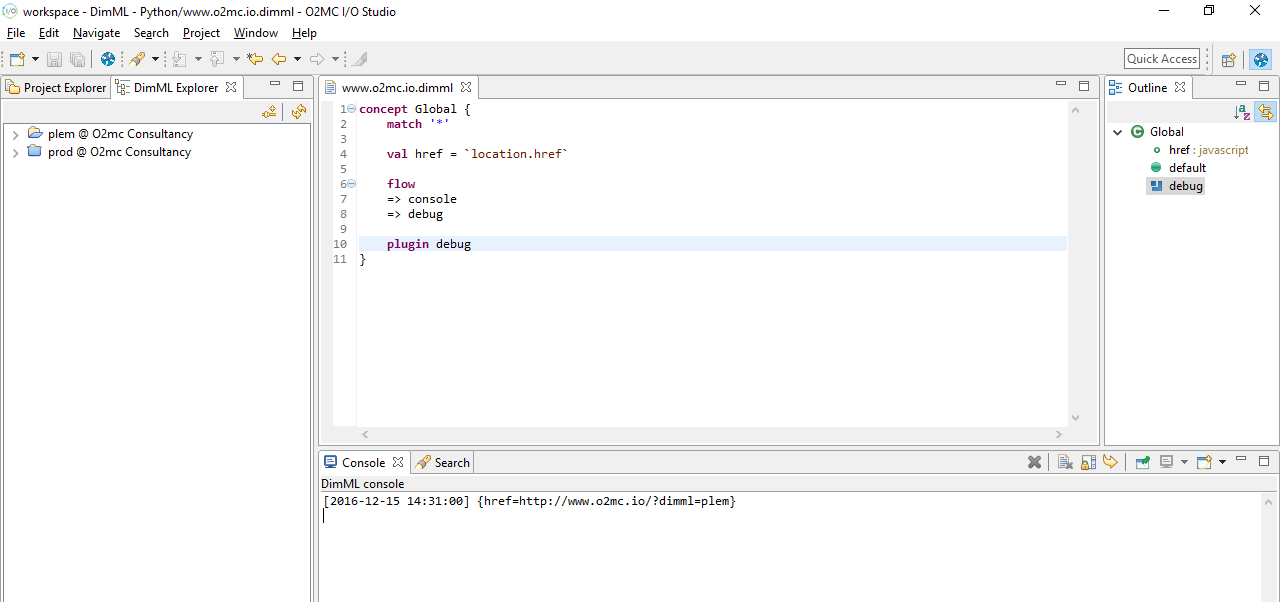

The created www.o2mc.io.dimml file should appear in the Project Explorer and be automatically opened in the editor. Copy and paste the code to the right into the file and save it:

concept Global {

match '*'

val href = </span><span class="n">location</span><span class="o">.</span><span class="na">href</span><span class="err">

flow

=> console

=> debug

plugin debug

}

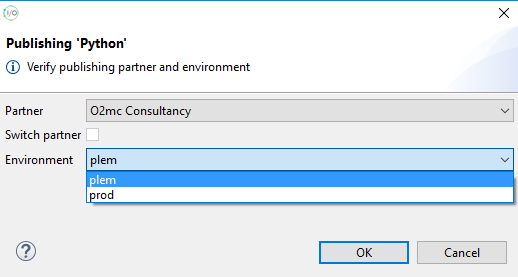

Publishing

To publish this example project to a DimML development environment, make sure the www.o2mc.io.dimml is opened in the editor and follow these steps:

- In the toolbar, click on the Publish DimML button (or use the Ctrl+Shift+D keyboard shortcut)

- In the Publish DimML confirmation dialog, select the desired Partner and click OK

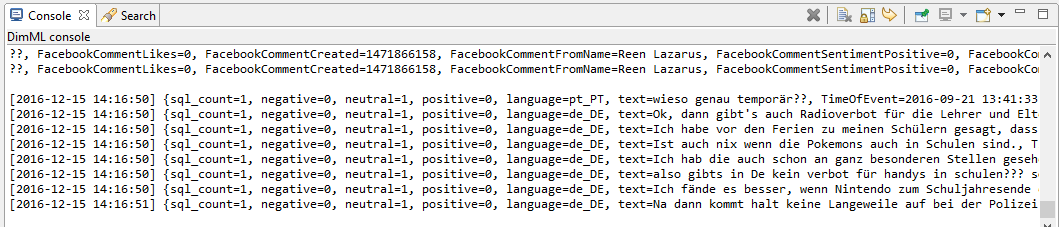

In the Console at the bottom of the window a DimML console should appear if publishing was successful. This is where the data flow information (at the point where => console is located in the DimML file) will appear when the published file is executed.

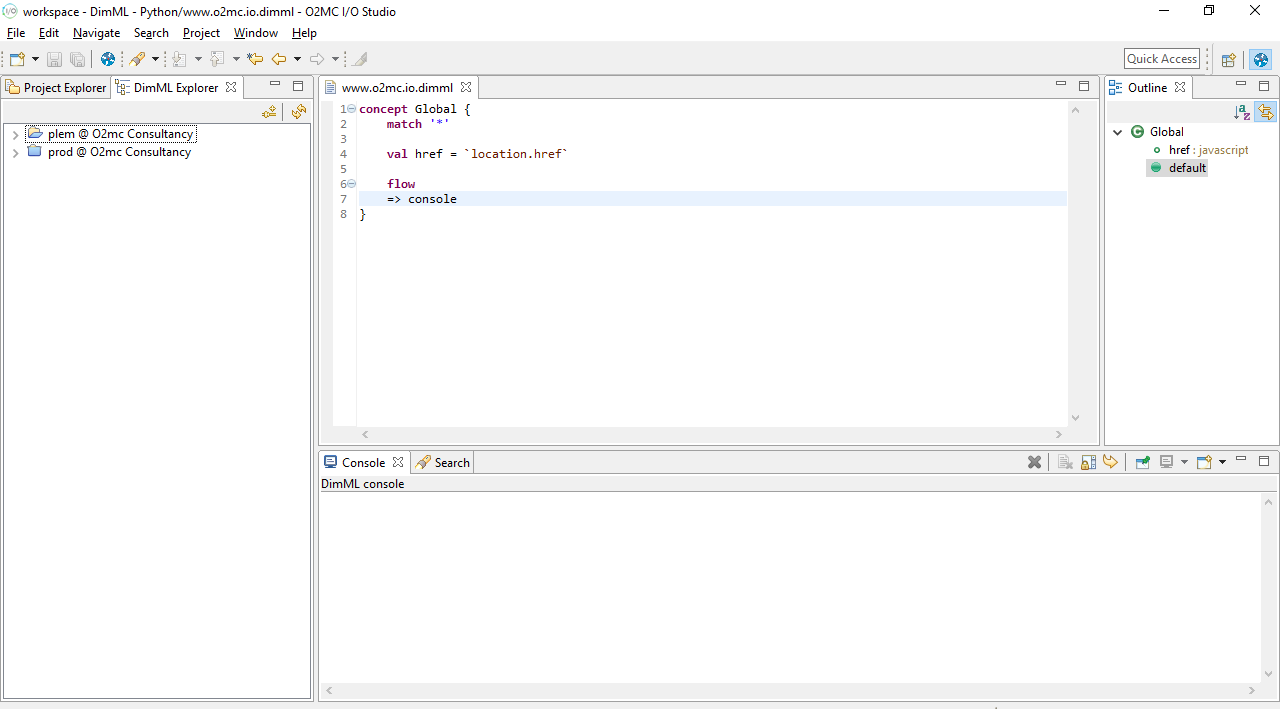

To verify that the publishing was succesful, follow these steps:

– Open the DimML Explorer

– In the top right corner of the DimML Explorer, click on Reload to update the view

– In the DimML Explorer, go to ${user} @${userGroup} – www.o2mc.io.dimml

– Double click on www.o2mc.io.dimml to open the content in an editor tab

The file should be present and the content should be equal to the code snippet copied earlier if the publishing was succesful.

Execution

To execute the example DimML file, follow these steps:

– For any website that does not have the Single Line of Code installed, add it automatically to the site by using the DimML Extension – In a web browser, go to http://www.o2mc.io/?dimml=${user} – Verify that in the browser console (F12 to open, Console tab) the following notice appears: DimML development mode: ${user} – In the DimML console in the O2mc Studio, verify that the following line appears: [yyyy-mm-dd hh:ii:ss] {href=http://www.o2mc.io/?dimml=${user}}

Note: Replace ${user} with the DimML user name associated with the user token entered in the DimML plugin configuration step earlier in this document.

Installing O2MC Studio

Prerequisites

This O2MC Studio installation guide describes the process of installing the O2MC Studio and provides the steps towards creating, publishing, and executing an example DimML file. Prerequisites for installing the O2MC Studio are

- The O2MC studio archive, which can be found here:

- An O2MC user token created for you giving access for a specific set of domains and user groups

- An O2MC user string linekd to the user token, herafter referred to as ${user}

Installation

To install the O2MC Studio, follow these steps:

- Move the archive file to the desired directory (hereafter referred to as

${o2mcStudioDir}) - Extract the archive file (Windows: right mouse click, Extract All…)

- Open the

${o2mcStudioDir}/o2mc-studio/directory - Execute O2mcStudio

- Choose a default workspace directory. This directory is used to store your DimML files on your device

DimML plugin configuration

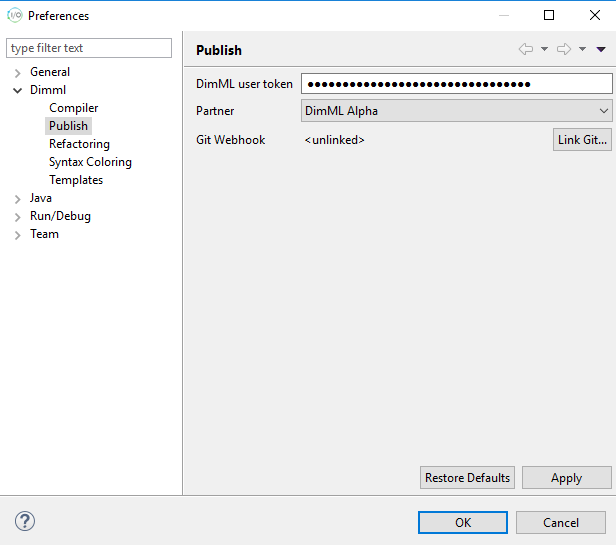

After opening the O2mc Studio, follow these steps to configure the DimML plugin:

– In the menu bar, go to Window – Preferences

– In the left part of the Preferences dialog, go to Dimml – Publish

– Enter your DimML user token in the input field

– If a valid user token is given, the Partner dropdown box is automatically populated

– Select a user group from these options and click OK to finish the configuration

DimML specific parts of the O2MC studio

The O2mc Studio provides syntax highlighting for DimML files and publishing functionality. Moreover, it comes with a default DimML perspective to make DimML development more convenient. A perspective is a specific configuration of views and their positioning within a window. The DimML perspective adds the DimML Explorer tab and enables the DimML console type in the Console view. All provided features are described in more details below.

Publishing

To publish DimML files in a project to the platform, open one of the DimML files in the project and

click on the Publish DimML button in the toolbar at the top of the window. Alternatively, you can

use the Ctrl+Shift+D shortcut.

In the window that appears you can determine to which environment the project’s DimML files will be published. The Partner dropdown lists all user groups for which publishing is allowed for the configured user token. In case you change this value and this is automatically stored as the new default for future publishing. You can uncheck the Switch partner box to indicate that this partner selection should only be used for the current publish action.

The Environment dropdown can be used to publish files to the production environment. By default, this will show the user name linked to the configured user token. Changing this dropdown to prod will publish the DimML files to the production environmnt. To proceed with publishing click the OK button. If the prod environment was selected a confirmation window will appear as an extra warning that this action will affects a live website.

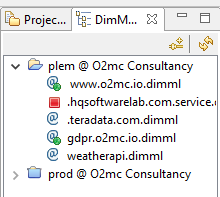

DimML Explorer

On the left side you can switch between the Project Explorer and the DimML Explorer. The DimML Explorer displays the published DimML files for the selected user group in the DimML plugin configuration. The user environment and production files are separated.

The files in the explorer tree are given icons that provide information about their platform status. Applications published to the production environment will only run for a whitelisted list of domains per partner. Reach out to your O2MC contact for adding new domains to the registered list.

In the user environment tree registered domains are indicated by adding a green light to domain files that would be allowed to run when published to production. Files without a green light should be added to the whitelist before they are actually executed after being published to production. All domain can be used in any development environment, so the registration is only necessary for production applications. In the production tree you will see that files for domains that are not whitelisted are indicated with a red light.

For service files a green arrow indicates it is active. A yellow rectangle combined with a green arrow shows that the service file does not contain any cron rules and because of that is not running. A red square indicates that the file does contain match rules but is currently not active.

DimML Console

At the bottom of the window the Console tab is shown. During DimML development this console is used to provide feedback regarding execution of DimML files for your DimML environment. Error messages and results from console flow elements are displayed here.

Just above the Console tab, on the right, is a list of icons. The Clear Console icon can be used to clear the current console output.

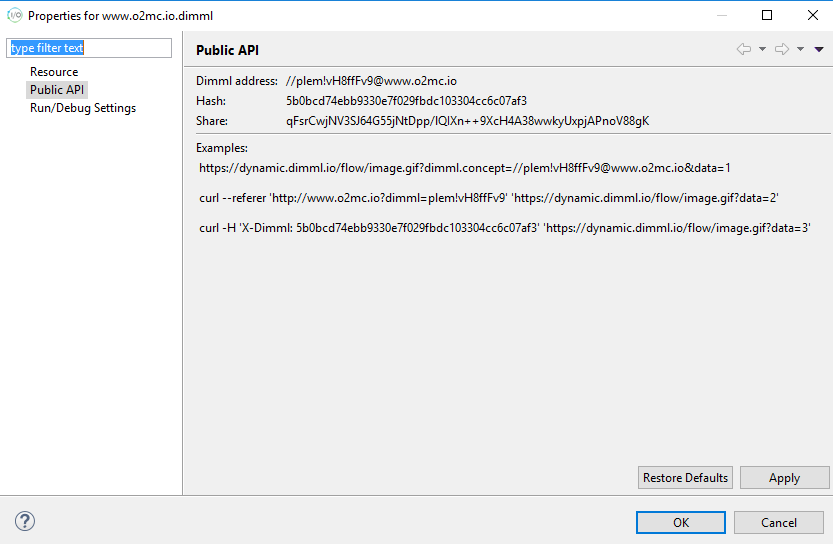

Code sharing

To share code with other developers you can generate a temporary share token that can be given to used to access your code. To obtain this share token, right click on a domain file and click Properties. In the Properties window, go to Public API to view information on how your development file can be accessed.

Besides examples of how to trigger concept execution of the selected file, at the top of the Properties window a Share token is displayed. Give this token to other developers and they will be able to temporarily access the published version of the selected file, including all imports from that file, from your development environment.

For other developers to use this share token, they can follow these steps:

– Go to the DimML Explorer tab – Click on the Connect to share icon at the top of the tab – Enter the provided share token and press OK

The share appears temporarily, until an hour after the share token creation, as an extra tree in the DimML Explorer tab and the content of the files is can be used similarly to the other trees in the DimML Explorer.

Deploying the platform

Overview

The process involves the following steps:

- Install O2MC platform application in Azure Marketplace

- Register company to obtain a product key

- Activate platform to obtain a user token

- Download and configure O2MC Studio

- Publish first DimML file and test platform

Every step is described in more detail below.

1. Install O2MC platform application in Azure Marketplace

In the Azure portal, perform the following steps to install the O2MC platform application:

- Click on

Create a resourcein the left toolbar. - Search for

O2MCin the Marketplace search bar. - Choose O2MC platform application to proceed.

- After reading the presented application description, click

Create. - Configure your instance like you normally would in Azure.

- Launch the O2MC platform application.

- When all resources are deployed, write down the assigned public IP address.

2. Register company to obtain a product key

A product key is required to activate the O2MC platform application. This product key can be obtained by registering the platform installation on the Product key registration page. This registration step does not depend on the actual platform installation. After filling out the registration form, a product key is provided. Make sure you properly store this key and have it available when you reach the platform activation step.

3. Activate platform to obtain a user token

After registration to obtain a product key (Step 1), and installation to create a running platform (Step 2), the next step is to activate the platform. The first step is to properly configure DNS, which is a required step to retrieve a valid SSL certificate (automatically using Let's Encrypt) for the configured O2MC platform application domain.

- Configure the DNS settings for the configured domain to point to public IP address written down in Step 1 after deployment of the O2MC platform application.

- When the updated DNS settings are effective, navigate to the configured domain.

- Enter the product key obtained in Step 1.

- After submitting the form, the product key is verified and an SSL certificate is requested for the domain.

- Securely store the provided user token.

4. Download and configure O2MC Studio

With an running, activated platform, the next step is to install the O2MC Studio. This assists in writing DimML files and allows publication of the files to the O2MC platform application installation in Azure.

- Download the O2MC Studio and follow the installation steps.

- During plugin configuration, enter the configured domain as API domain.

- Enter the user token obtained in Step 3 as DimML user token.

- Choose Apply and Close to finish plugin configuration.

Getting started

Hello world

helloworld.service.dimml

concept HelloWorld {

match `once`@service

flow

=> code[message = `'Hello world!'`]

=> console

}

Notice how we named our file helloworld.service.dimml in the example to the right. The 'service' part indicates to dimml that we'd like to start a long-running process, which might be triggered by external or internal signals.

We start off by supplying our concept with a match-rule, which will be interpreted by @service. The matching string preceding @service can be either once, keepalive or a cronjob style string. A Cron string will execute the concept according to your specified schema. keepalive will execute it once when you upload the concept, and every 5 minutes after that. once will only execute it when you upload it. This is useful in our case, as we only want to display 'Hello world!' once, for demonstration purposes.

We then tell dimml we'd like to run a concept which has a flow of data in it. These flows can have a name, which we can supply by including it behind our definition, in between parens. If we don't include anything, then the flow is implicitly named 'default'. So flow => console is the same as flow (default) => console.

The next part, the code flow, creates a variable with the name 'message'. This variable gets assigned the output of the codeblock (the part in between the backticks `) which enclose the code. Our groovy code then runs on the server and, in true groovy fashion, if we don't explicitly return anything, the last value operated on, gets returned. In this case it's the string 'Hello world!'

The next element in the flow is [console](#console). When you insert a [console](#console) flow, dimml will try to output the current variables, along with the values they contain, to your development console in o2mcstudio.

In our case it will echo back:

[2017-10-17 13:37:00] {message=Hello world!}

The URL will contain an identifier for your sandbox identifier so the platform knows which sandbox application to open.

Clickstream data

concept Global {

match '*'

val url = `window.location.href`

flow => console

plugin script `console.log( 'Hello world' )`

}

The code in the example to the right does the following:

- It defines a concept called “Global”

- The concept encapsulates all the code within the accolades. That code is executed if the concept is successfully matched (see below).

- The Global concept will match all URLs (‘*’). This means that the code within the Global concept is executed for all pages.

- A variable called ‘url’ is created and set with the URL of the page where the code is executed

- The code between back ticks ( `-symbol) is JavaScript that is executed in the visitor’s browser (client side)

- All ‘vals’ that are created in this way are included in the DimML pixel request as a query string parameter

- The flow language element is executed after all values for the 'vals' are collected. In this case this is used to output the collected data to the O2MC studio

- The script plugin enables client-side execution of JavaScript code placed between back ticks. This makes it easy to validate that an DimML application is running for a page

Create a new file in the O2MC I/O Studio for a website that has the SLoC installed. Provide the user token as instructed in the instructions on the O2MC I/O Studio installation. Publish the file the test environment assiociated with the user token.

Open the domain associated with the DimML file and add ?dimml={user} to the URL including the specific environment. Open the Javascript console (F12 in Chrome) to open the Developer console. This should show the result of the processing by the DimML application:

Hello World

The console in the O2MC I/O Studio should show this message:

{url=http://www.yourdomain.com/}

Note that the value for URL changes with each new page that is opened.

Content routing

Example of HTML content routing

@groovy

concept Html {

match '*.html'

plugin html `

<p>What a nice line of text</p>

<p>And another one!</p>

`

}

Apart from using a DimML application to collect data, a DimML application can also be used to provide content dynamically. To achieve this a DimML application can at run time process process data and dynamically route the user agent to different content. This results in extensive personalisation options. Even with the same URL that is used, DimML can route the request to different content. That means that a web page can have one URL implemented as content on a page, but the content can be different based on the user preference, current weather or any data source imaginable.

The first way to achieve this, is to use the HTML plugin. This plugin allows a user to define HTML code which will be executed the moment the URL is opened. The match rule of the concept can be used to define several different personalised HTML pages in one DimML application

To call the DimML application (without specific headers), the following URL has to be opened:

http://dynamic.dimml.io/content/[hash]/

The hash value of the DimML application can be found by opening the O2MC studio, right clicking on Properties and clicking Publishing API. Several properties and examples are available, the second property Hash is the one that is used for content routing.

The code for a HTML page can be seen to the right.

Content plugin example

@groovy

concept Img {

match '*.png'

flow

=> code[img = `"https://unsplash.it/200/300"`@groovy]

=> out

plugin content `img`

}

Note that opening looking at the match rule in this example, the URL http://dynamic.dimml.io/content/[hash]/page.html will show the HTML code, but http://dynamic.dimml.io/content/[hash]/page.htm will not (since the match is on “.html”).

Apart from HTML code, it is also possible to route the request to specific content. For this the content plugin is used, which requires a field as argument containing the URL to route to. The following code routes the DimML URL automatically to a picture URL, as can be seen on the example to the right.

Encrypting credentials

Distributing data often requires access to third-party systems. DimML source files are used to configure these data pipelines and can contain security sensitive information like passwords. Instead of storing these passwords in clear text, it is also possible to store an asymmetrically encrypted version of credentials that only a specific part of DimML can decrypt.

Some flow elements (e.g. 'sql') can handle encrypted credentials. It is also possible to decrypt the credentials using the 'code' flow element:

concept Global {

match '*'

@groovy

flow

=> code[password = `decryptCredentials('t1KTx3x15bnB5vqhgWlnpuGheTaTmDwIjyNm8md4DN8E7BID6DbLWwZ+d6KdB+eC9+yJA8dfJn80bvgm6bLc6qHzXARs3jimwiSHqJJlPHoN7GqABI2txtNrJ+7I1AthhQZQ+Onzo3EgFcq8FwrvQJ3X0bHa0g4Oqtxiepm3gWkpOcbQ8PMar/30KPW9Y0JVmQ0V9BC+dP8pmGpCRrDcq/jghVTTLJ/yq+t/jtC61QEg5KKM1xULSQudKWA/08Lq1RaobxFeRvNz4iuqJMnzWIZKUCW+M2KPocKLX/S4cZ/q0VSxi+tbHrd+PqhWXhqsbZjjf2+nNC2G8+piKwQ/Jw==').password()`]

=> console

}

The example above will yield 'test' as the password. The decryptCredentials function returns an object with the 'user', 'password' and 'domain' functions, that return the respective values. decryptCredentials is only available from Groovy code.

An encrypted string can easily be created using the DimML Eclipse plugin: - press the right mouse button anywhere in a dimml source file - select 'Encrypt credentials...' - specify any fields that you want to encrypt (all fields are optional) - optionally specify a flow element

Specifying a flow element will restrict decryption to that element only. This can enhance security when the specified flow element does not expose the decrypted information to the other parts of the DimML program. An example that uses this feature to access a database is given below.

concept Global {

match '*'

@groovy

flow

=> sql['select * from metrics order by stamp desc', `db`, batch = '1']

=> console

const db = `{

type: 'mysql',

credentials: 'K89hsLAQRuttB9JO4TxJ+NttzCCXFf83ccGrimtAwU8lSC/UOf9Hx8EnWxkDit/KCF7CkaFFzFXZUBUoY8kkG2FGsbG9a8DAdGM0vTaum19F2wdpPhnkuXxTsLxYVRMJ1Hb3I6TtJiiyRWim0jGHOoRT/441obBFf5dqVgVTiQDFSBNoOO/PYfgUy9dIqkfFf9FQu5cEMxe8ADu5OPjt4YZXABoiNSPTspWaFTEkBnB+6STHMmY+VlSJ+j+48xzY3XaKYdtx1qKP3sUkpt7GjbRgnWQ0ibXN9uPUIM+rRYhiyPRQ4oBlo1OVLXKzwEtjh8siioSJLkc3QH6MaWizWA==',

databaseName: 'dimml'

}`

}

The above code will fail because the password is incorrect. But the sql flow element is able to decrypt the encrypted password (it is just an incorrect password). Attempting to decrypt the above key using a 'code' flow element (as decribed earlier) will fail because the 'code' flow is not authorized to decrypt the key.

Note that encrypted credentials can optionally specify a domain/host. The sql flow can use this field to connect to a specific database server.

Algorithm specification

Encrypted credentials are stored as a URI and encrypted with the Dimml public key. The URI takes the form:

creds://user:password@domain?param1=v1¶m2=v2

dimml.js Reference

The Dimml SloC (https://cdn.dimml.io/dimml.js) loads Javascript that exposes some functions through the dimml global js object.

dimml.event(eventName, [dataObject], [scriptName])

Examples

dimml.event('adsLoaded')

dimml.event('click', {x: event.x, y: event.y})

dimml.event('click', {x: event.x, y: event.y}, 'flow')

Send event data to DimML. The eventName should match the name of an event handler (on <eventName> do <code>) in dimml code. The optional dataObject should be an object whose properties are send to DimML (in addition to the 'vals' specified in the dimml code). The optional scriptName allows setting the phase of the request to DimML directly. By default it first loads Javascript that adds code to collect the vals in the concept. By specifying 'flow' as the scriptName this step can be skipped, hence sending the data in the dataObject directly to DimML. This is especially useful when sending data in time-constrained situations like page navigation.

dimml.sha1(string)

Calculates the SHA1 hash of the specified string.

dimml.cookies.get(key)

Returns the cookie value belonging to the specified key or null if it is not found.

dimml.cookies.set(key, value, [ttl], [path], [domain], [secure])

Sets the cookie key to the specified value. ttl specifies the time to live in hours. If it is omitted a session cookie is created. The optional path and domain are used to store the cookie at a specific location. A secure cookie is only transmitted over HTTPS.

dimml.cookies.unset(key, path, domain)

Removes the specified cookie.

dimml.log, dimml.debug, dimml.info, dimml.warn, dimml.error

In most modern browsers these functions will be bound to the Javascript console object. In older browsers they fall back to legacy mechanisms to display debug information in the console.

dimml.load(url, callback)

Loads an external Javascript file. The callback function is executed when the load is complete.

dimml.reload()

Restarts the matching process and executes concepts again.

dimml.safe(fun)

Executes the function in a 'safe' environment. All exceptions are logged instead of thrown.

dimml.ready(callback)

Executes the callback when the DOM is fully loaded.

new dimml.uri(uri)

Examples

new dimml.uri(location.href).query('dimml')

new dimml.uri(uri).path()

var uri = new dimml.uri(location.href);

var path = uri.path();

var queryObject = uri.query();

var dimmlEnv = queryObject.dimml;

Creates a URI object that exposes various functions to retrieve properly decoded URI parts: protocol, username, password, host, port, path, query, fragment. Most functions return a string. The query function returns an object with all query parameters. Alternatively you can specify a specific parameter to return.

Language guide

concept

The concept statement is used to declare a concept and optionally inherit definitions from other concepts. Concepts are used to group logic and perform a specific task defined in DimML code. For each execution, only 1 concept is active and executed (apart from extended concepts). To identify which concept to use, a match statement is used.

In defining data collection logic for a website, it is often the case that some part of the collection process is reused in different places. DimML offers the concept of ‘extending’ or ‘inheriting’ logic to support this reuse.

Example of extending/inheriting logic

concept A extends B, C {

...

}

As the example to the right demonstrates, a concept can extend more than one other concept. To do so, separate the concepts using a comma (,). When a concept A extends B, all of B’s values, flows and plugins are available to concept A as if they were declared in A. When A and B both define a value or plugin with the same name, the object in concept A is used. The objects in A ‘overwrite’ the objects in B.

If for instance concepts B and C are defined in a functions DimML file, the code would look like this

Example of extending a concept located in a different path

import 'lib/functions.dimml'

concept A extends 'lib/B', 'lib/C' {

...

}

const

Constants are defined similarly to fields defined with the val statement. However constants are typically for internal use: they can be used in connection details or similar information, but they are not a part of the data tuple. Furthermore as expected from constants: the value cannot be changed throughout the execution of the DimML file.

Const example

concept Global {

match `once`@service

const CPT = 'Global'

def a = `"The current concept is ${CPT}"`@groovy

def b = `CPT`@groovy

flow

=> code[ a = `a()`, b = `b()` ]

=> json

=> console

}

}

// results in the output, note that 'CPT' is not in

{"a":"the current concept is Global","b":"Global"}

Predefined constants

Every DimML application contains the constant _DEV. This constant is a boolean indicating if the application is running in a test environment (any environment that is not production) or not. This is especially usefull for writing a DimML application which for running in test or in production should take different actions. For instance the test DimML application should get files from the test folder, while the production application should use prod. The logic of this example can be combined in the example to the right.

_DEV example

`_DEV ? 'test' : 'prod'`@groovy

def

def function definition example with cookie values

val sessionId = `getCookie('session')`

def getCookie = {name => `

var i,x,y,cookies = document.cookie.split(";");

for (i=0; i<cookies.length; i++) {

x = cookies[i].substr(0,cookies[i].indexOf("="));

y = cookies[i].substr(cookies[i].indexOf("=")+1);

x = x.replace(/^\s+|\s+$/g,"");

if (x == name) { return unescape(y); }

}

return false;

`}

To define a function the DimML language uses the def keyword. DimML functions can be written in both client and server side code. They can be called from value collection, flows and other functions. Functions can be inherited and overwritten using concept merging and global scope

Example:

def getQuery = `location.search`

This defines a client side function named getQuery that returns the query portion of the current URI. Writing a function definition on a single line will be interpreted as an expression. A multi-line function definition will expect a function body (e.g. in Javascript this should contain a return statement). You can use the client side function when collecting values:

val query = `getQuery()`

By default code snippets (and therefore also function definitions) are defined in Javascript. To define and execute server side functions, use the @groovy statements:

def getName = `return 'John Doe'`@groovy

val query = `getQuery()`@groovy

For defining a function that takes parameters, the => notation is used. Multiple parameters are separated by a comma. Ergo:

If we have the function

def myFunction = { myArgument, myPunctuation => `return "Hello " + myArgument + myPunctation`}

And we call it like this:

val ubiquitous = `myFunction('world', '!')`

then ubiquitous will now contain the string

Hello world!

Client-side functions

plugin script `

console.log('the session cookie is: '+getCookie('session'));

`

Example of using a server side function inside a flow

val url = `location`

flow

=> select[`url.size() > 200`@groovy]

=> code[query = `getQuery(url)`@groovy]

=> json

Client side functions can also be called from various plugins. For instance, the script plugin allows you to attach any Javascript code to a concept (this code will end up in the browser when concept is triggered). Functions are also supported there.

events

Event example

concept Global {

match '*'

event click = `window.click`

}

Acting on client-side events allows fine-grained control over data collection and processing. Handling these events in DimML consists of two parts: Declaring the event using the event statement, and defining the appropriate logic when the event occurs.

Defining an event in concept-scope will cause the client-side handler to be registered for that particular concept only (only if also an on event statement is defined, otherwise nothing is done).

Event in global scope

Event defined in global scope

event click = `window.click`

concept Global {

match '*'

...

}

An event can also be defined in global scope. This will associate the event with every concept in scope:

Event expressions

Event example: show link url in the javascript console when hovering mouse over a link

event overLink = `document.getElementsByTagName('a').mouseover`

=> `{destination:it.target}`

concept Global {

match '*'

on overLink include {

flow => debug

}

plugin debug

}

Event expressions consist of two parts. The part before the last dot (.) is the selector and after the dot is the event name. The selector defines an element on the page. The event name defines at what interaction on the element a DimML event should be triggered. In the example above the selector is ‘window’ and the event name ‘click’. Some more examples:

event buttonClick = document.getElementById('button').click

event overLink = document.getElementsByTagName('a').mouseover

event exitLink = $('a.ad').click

The selector can be a regular HTML DOM element, a list of DOM elements or a jQuery selector.

Furthermore an event definition can be extended with a function. The event will then only trigger when the expression evaluates to ‘Javascript truthy‘. Inside the function, the element that triggered the event can be accessed using ‘it‘ as a reference.

on … do / include

Event with on...do

event overLink = `document.getElementsByTagName('a').mouseover`

=> `{destination:it.target}``

concept Global {

match '*'

val main = 'true'

on overLink do CollectLink

}

concept CollectLink {

val link = 'true'

flow => console

}

Results in : {link=true, destination=http://www.dimml.io/downloads.html}. While using the exact same code and only changing do CollectLink to include CollectLink will result in

{main=true, link=true, destination=http://www.dimml.io/downloads.html}

As shown in the last example, the ‘on‘ keyword is used to specify an action when a DimML event occurs. When ‘include‘ follows the name of the event, any logic defined in the enclosing concept is used as well. Alternatively ‘do‘ can be used instead of ‘include‘, which omits the details of the parent concept. An example of this can be seen to the right.

flow

Flows are used to declare data processing and export steps as a directed graph. Flows can only be used inside a concept. As input for the flows the values for all the variables (vals) are available.

Flows statements can also contain a name between brackets. The name between brackets identifies the bucket that functions as the source of data. The ‘default‘ bucket is a special bucket that contains the data collected through val definitions. If no bucket is specified the ‘default‘ bucket is assumed (as you can see in the first flow example). Furthermore you can point from any flow to any other flow and multiple flows. As a results DimML allows you to quickly create multiple data streams. In the example below you can see how the previously defined flow results in 2 additional flows

Example of multiple data flows

concept all {

match '*'

val pagename = 'home'

flow

=> code[views = '1'] (debug)

=> json (ftp)

flow (debug)

=> console

flow (ftp)

=> ftp[

host = 'localhost',

user = 'user',

password = '123456789',

dir = '/tmp/upload',

flush = 'true'

]

}

It is also possible to send the output of a flow to multiple destinations (with different flow names). For instance the DimML code below will format the data tuple and continue with two different flows.

flow => json (goto-1) (goto-2)

This notation is particularly usefull for the if flow element.

Parameters

Several flow elements may need parameters. These parameters contain string, regular expressions or code snippets. They should be separated with a comma (,) and can optionally be named. Note that only server side code is allowed because the flows are executed server side. By default server-side Javascript is used for the code snippets in the flow elements. If you want to use groovy, you can add @groovy after the last backtick. Parameters are placed within square brackets.

Example of flow with parameters

concept all {

match '*'

val pagename = 'home'

flow

=> select[`typeof(pagename) != 'undefined']

=> code[views = '1', pagenamelength = `pagename.size()`@groovy]

=> filter['pagename','pagenamelength','views']

=> csv[mode = '1']

=> console

}

// results in the following code in the console:

home;4;1

import

The import statement is used to import other DimML files. DimML borrows ideas from aspect-oriented programming to support the separation of these aspects into distinct DimML files.

Whenever the DimML platform receives a request from a webpage, an attempt is made to discover DimML files that are relevant to the page. The default behavior is to use the domain of the request URL to create a list of relevant DimML files. For instance, assuming the domain is www.example.com the list would be:

- example.com.dimml

- .www.example.com.dimml

- .example.com.dimml

- .com.dimml

Any missing files are skipped. The remaining files can include import directives that will add additional DimML files to the end of the list like this:

import 'customer/settings.dimml'

The DimML platform loads and merges the files in the order specified.

Each DimML file is identified by an absolute URI.

Imports refer to other DimML files using relative or absolute URIs. If the previous import statement is a part of /prod/www.example.com.dimml, the DimML file that will also be loaded as a result of the import is /prod/customer/settings.dimml.

Imports can only be done on a global level (not within a concept). How the content of the different DimML files is merged is described in the concept section.

language

A big part of the DimML language is the use of code snippets written in non DimML code. The snippets are enclosed by backticks. DimML can interpret these snippets written in different programming languages, currently Groovy, client side Javascript and server side Javascript. The default language differs by language element:

client side javascript:

- val

- const

- def

server side groovy:

- flow elements

- plugins

This means that

val url = `document.location.href`

will run in client side javascript. While

flow => code [page=`url`]

will run in groovy.

The default language can be overwritten for a specific code snippets by adding either @groovy, @javascript or @dimml for Groovy, client side Javascript and server side Javascript respectively after the closing backtick of the code snippet.

@groovy

def fun1 = `...` // groovy

concept A {

val v1 = `...` // groovy

@javascript

val v2 = `...` // javascript

def fun2 = `...` // javascript

}

def fun3 = `...` // groovy

Note that not all overwrites are possible; if the execution takes place on the server for instance, client side scripting is no longer possible.

For example

val url = `getURL()`@groovy

will define a val which gets its value using Groovy exection.

The default language can also be changed between statements in a dimml file or concept. The new default language is set from that point forward until the scope ends. The code to the right illustrates that.

match

URL matching

Matching page URLs to a concept can be done using a combination of various match instructions. They are combined using an or operator. The asterisk (*) wildcard is used for any number of characters (similar to the file system wildcard).

Example of a simple match using wildcards.

concept A {

match '*/index.html'

match '*/welcome.html'

}

A match statement is first split into its URI components: scheme, authority, path and query. For example:

match 'http://example.com/products/books?size=large'

The components will be:

- scheme: http`

- authority: example.com`

- path: /products/books

- query: ?size=large

The interpretation is that a specific URI will match this rule if and only if:

- it’s scheme equals ‘http’

- it’s authority equals ‘example.com’

- it’s path equals ‘/products/books’

- it’s query string contains at least one ‘size’ parameter with value ‘large’

Relative URIs are also allowed (eg. /products/books, //example.com, ?size=large, etc.). When a relative URI is used in the match expression, matching will only occur on the components that are present. For example:

match '/products/books'

will match all of

https://example.com/products/books#ch1http://audacis.org/products/books?user=davidblabla://david:password@blabla/products/books

Matching is done by traversing each of the file and checking each concept and match statement. This is done in order of loading and importing the files and the sequence top to bottom of the concepts. If a match can be made, the concept is chosen as the one use for the specific request.

Query matching

Matching on the query component of a URI works differently. The query component of a match expression is split into its parameters. The interpretation is that these parameters should be present in the target URI to match, but not necessarily in the same order. When the value is omitted, the parameter is only tested for its presence and it can have any value. For example:

match '?size=large&user'

will first be split in its URI components (only a query component) and then into its query parameters:

size = largeuser =

This will match

http://example.com/products/books?user=david&size=large

It will not match

http://example.com/index?size=small&user=david

Service matching

DimML applications are typically consumers: they act when called upon (e.g. when a page is opened). However it is also possible to start a data stream from initiated by DimML itself. These are called DimML services. The use of services (producer) requires 3 changes to a consumer DimML application:

- The DimML file is not written for a specific domain, so any name can be used

- The extension should be changed to .service (in stead of .dimml)

- The match rule should include the schedule at which the DimML file will execute

Example service matching with cron

concept CheckDB {

match `0 0/5 * * * ?`@cron

flow

=> sql['...', `DB`, batch = '1']

=> select[`field < 0`@groovy]

=> ...

=> mail[`MAIL_OPTIONS`]

}

The DimML application to the right will run (once) every 5 minutes, perform a look up into the database and send an email if a certain condition is met. As you can see, the match statement is written as code snippet (between backticks). To avoid the interpretation of this code snippet as Javascript, the @cron statement is added. This is the only correct use of @cron.

Example of an extensive schedule

concept CheckDB {

match `0 30 10-13 ? * WED,FRI`@cron

flow

=> ..

}

This will trigger a DimML service at 10:30, 11:30, 12:30, and 13:30, on every Wednesday and Friday.

By default all services have a limited lifetime (to prevent forgotten services that run perpetually). Services defined in the sandbox have a lifetime of 1 hour, development services have a lifetime of 1 day and production services have a lifetime of 1 year.

A special schedule is the ‘producer’ schedule:

match `keepalive`@cron

This schedule sends a pulse every few minutes to the first flow element in the default flow(s). It is used to keep ‘producer’ flows alive. A producer flow element does not require input data but is allowed to emit data down the flow whenever it is available. An example is the twitter flow

Prematching

Example $preMatch: Wait for 1 second before matching

def $preMatch = {doMatch => `

setTimeout(doMatch,1000);

`}

Example $prematch : Wait (polling) for the availability of some javascript library

def $preMatch = {doMatch => `

var handle = setInterval(function(){

if (window.jQuery) {

clearInterval(handle);

doMatch();

}

},100);

`}

Example $prematch: Wait for the availability of some javascript you can edit

def $preMatch = {doMatch => `

if (window.myLib) {

doMatch();

} else {

window.dimml.doMatch = doMatch;

}

`}

In the other javascript lib:

if (window.dimml && window.dimml.doMatch) window.dimml.doMatch();

Example: Match multiple times to select a concept based on client-side events

def $preMatch = {doMatch => `

var handle = setInterval(function(){

if (!window.s_c_il || !window.s_c_il[0] || !window.s_c_il[0].t)

return;

var s = window.s_c_il[0];

s.tttt = s.t;

s.t = function(){s.tttt();doMatch();}

clearInterval(handle);

doMatch();

},250);

`}

The special function $preMatch allows interaction with the client-side matching process. It can only be defined within the global scope of a DimML file; it has no effect when defined inside a concept. The signature of the function definition is:

def $preMatch = {doMatch => `...`}

Note that as in other DimML elements backticks (`) are used for code snippets. By defaults the code snippets contain Javascript but other languages can be used as well (see val statement for more explanations). Prematching can only be done using default Javascript code snippets.

Control over when the matching process (for this particular DimML file) should start and select a concept is relinquished to the $preMatch function. The doMatch parameter is a callback function that should be executed when the matching process should select a concept. Some examples can be found to the right.

val

The val statement declares the collection of values (either client or server-side). The val statement is only valid inside a concept. Each val statement is executed in parallel with the other val statements; no sequence is defined.

val type = 'example'

A val statement can also be assigned code, by means of backticks. These backticks are used for other DimML elements as well (def, plugins, etc.). The backtick can be extended with an @ to define which programming language the code to use for parsing and executing. By default client-side Javascript is used. Therefore

val url = `document.location.href`

is the same as

val url = `document.location.href`@javascript

Furthermore you can call functions (see def statement) which are defined in the DimML file as well. If for example you have defined a function in groovy to define the page name, the (server-side) val statement would look like this

val pagename = `getPageName()`@groovy

version

Version example

version '2.2-adversitement'

Optional element to specify a target platform and version of the DimML code that is used. When present this should be the first element in a DimML file. Though all platform servers can send requests to all connected servers, adding a version immediately points the file to the right server.

Flow Steps

Basic Processing

addjson

Example

concept Example {

flow

=> code[j = '{"a": "foo", "b": 133}']

=> addjson['j']

}

Result (given we start with {a:"foo", b: 133} as object):

{ j: {"a": "foo", "b": 133}, a: "foo", b: 133 }

Parse a JSON object string and add the elements as fields to the data tuple.

Description

Given a Field parameter, parse the field’s content in the input tuple as a JSON object string and add the key-value pairs as fields to the output tuple.

Parameters

| Name | Type | Description |

|---|---|---|

| field | identifier | JSON object string identifier |

References a field available in the input tuple.

Input

The field referenced by the Field parameter should be available in the input tuple.

Output

The output tuple contains the key-value pairs from the JSON object string as fields.

Remarks

- JSON object string should be well-formed.

- Nested objects and arrays are parsed and returned as maps and lists respectively.

aggregate

Aggregate example

concept Example {

val url = `document.location.href`

flow

=> bucket['10s']

=> aggregate['url',views='count url']

}

Keeps track of the views for each opened url for a duration 10 seconds per url.

Run a single aggregation step on the collection of input tuples.

Description

Aggregate takes a set of tuples as input and aggregate the results into 1 tuple. Additionally add new fields which use the functions count, sum or avg on any of the existing fields

Parameters

| Name | Type | Description |

|---|---|---|

| fields | List of fields | The fields for which the data is grouped |

References fields available in the input tuple or extension on top of them

Input

The fields referenced by the List of Fields parameter should be available in the input tuple. Futhermore new fields can be defined which are the result of function on top of the existing fields

Output

The output tuple for each unique occurrence of the values in the List of Fields

Bucket

Bucket example

concept Example {

val url = `document.location.href`

flow

=> bucket['10s']

=> aggregate['url',views='count url']

}

This will collect the url for each page opened and aggregate the data based on that value. After 10 seconds for each unique url a tuple will be generated which will also contain the views field. This field contains the number of times the url has occurred.

Convert a stream of tuples that arrive in a predefined time slot to a single collection of tuples.

Description

Convert a stream of tuples that arrive in a predefined time slot to a single collection of tuples. Guaranties the timely delivery of the output collection if at least a single tuple has arrived in the time slot.

Parameters

time – Time slot for collecting tupless

The postfix time notation uses ‘ms’ for milliseconds, ‘s’ for seconds, ‘m’ for minutes, ‘h’ for hours and ‘d’ for days.

Input

No specific requirements

Output

All tuples of the input stream

buffer

Buffer example

concept Example {

val url = `document.location.href`

flow

=> buffer['10s']

=> aggregate['url',views='count url']

}

This will collect the url for each page opened and aggregate the data based on that value. After 10 seconds for each unique url a tuple will be generated which will also contain the views field. This field contains the number of times the url has occurred.

Convert a stream of tuples that arrive in a predefined time slot to a single collection of tuples. The only guaranty is that of eventual delivery of the output collection.

Description

The buffer element takes a single unnamed parameter that defines the minimum time to buffer tuples. The buffer tries to send the data as soon as possible, but makes no guarantees. The data is send as a single list of tuples and stamped with the current time as packet time. This time can be retrieved using the time element..

Parameters

| Name | Type | Description |

|---|---|---|

| time | String | Postfix time notation for collecting tuples. The postfix time notation uses ‘ms’ for milliseconds, ‘s’ for seconds, ‘m’ for minutes, ‘h’ for hours and ‘d’ for days. |

Input

No specific requirements

Output

All tuples of the input stream

buffer:aggregate

Buffer:aggregate example

Example

concept Example {

val url = `document.location.href`

flow

=> buffer:aggregate['10s','url',views='count url']

}

This will collect the url for each page opened and aggregate the data based on that value. After 10 seconds for each unique url a tuple will be generated which will also contain the views field. This field contains the number of times the url has occurred.

Perform aggregation as a streaming step on input data that arrives in a specific time slot

Description

This element combines both the buffer and the aggregate flow elements into one element

Parameters

| Name | Type | Description |

|---|---|---|

| time | String | String with postfix time notation for the time slow |

| fields | list of fields | the fields for which the data is grouped |

The postfix time notation uses ‘ms’ for milliseconds, ‘s’ for seconds, ‘m’ for minutes, ‘h’ for hours and ‘d’ for days. References fields available in the input tuple or extension on top of them

Input

The fields referenced by the List of Fields parameter should be available in the input tuple. Futhermore new fields can be defined which are the result of function on top of the existing fields

Output

The output tuple for each unique occurrence of the values in the List of Fields

buffer:session

Buffer:session example

concept Global {

match '*'

def guid = `dimml.sha1(+new Date()+Math.random().toString(36).slice(2)+navigator.userAgent).slice(20)`

val url = `location`

val sessionId = `sessionStorage.dimmlsid=sessionStorage.dimmlsid||guid()`

@groovy

flow

=> buffer:session['sessionId', timeout = '30s', `

session.startPage = session.startPage?:url

session.endPage = url

session.pageCount = (session.pageCount?:0)+1

false`]

=> console

}

This will collect the url for each page opened and the data based on the session ID. After 30 seconds for each unique url a tuple will be generated which will execute the code defined in the body. Since the startpage is stored only for the first tuple (and unchanged after that), it contains the first page of the visit. Similarly since the endPage parameter is updated for each new tuple, it will contain the URL of the last page of the visit. Finally the pagecount parameter is incremented with each page view. Since false is added to the body, no input tuples will be send to the next code element. Only 1 tuple will be send to the next code element, containing all session parameters. If for this DimML application first the URL http://documentation.dimml.io is opened followed by http://documentation.dimml.io/basic-syntax, the output tuple will be

{startPage=http://documentation.dimml.io, endPage=http://documentation.dimml.io/basic-syntax, pageCount=2}

Collect input tuples until a user session ends.

Description

This element buffers input tuples them using a session id. Apart from the session id, a time out can be defined after which the session has ended. The body of this code element can be used to perform additional calculation on the input tuples such as storing data in the session object. The body is executed at the moment the input tuple reach the code element. If the body returns false, the input tuple is discard. This is particularly convenient for calculting properties of a session both only storing them at the end of the session when the session expiration took place. All fields of the session object will be available as fields in the resulting flow

Parameters

| Name | Type | Description |

|---|---|---|

| time | String | String with postfix time notation for the time slow |

| sessionId | String | Value which is used to aggregate the data |

The postfix time notation uses ‘ms’ for milliseconds, ‘s’ for seconds, ‘m’ for minutes, ‘h’ for hours and ‘d’ for days. The session id refers

Input

The fields referenced by the List of Fields parameter should be available in the input tuple.

Output

Based on the use of false, a number of input tuple after the expiration as defined as field. Furthermore additional fields might be available

call (improve)

Call example

concept Example {

match '*'

val a = `3.0`@groovy

val b = `4.0`@groovy

flow

=> call[`Functions:pythagoras`@dimml]

=> debug

plugin debug

}

concept Functions {

flow (pythagoras)

=> code[`Math.sqrt(a.power(2) + b.power(2))`@groovy]

}

This will result in the following tuples:

{b=4.0, a=3.0, c=5.0}

Execute a specified flow on the input tuple

Description

Send the input tuple to a target flow and forward the resulting output. The target flow can be defined in a separate concept, possibly in a separate file. This effectively sends the data to a flow in another concept, executes that concept, and returns the result.

Parameters

target (code) – Description of the concept and flow to be executed

Input

No specific requirements

Output

The output of the called flow given the current input tuple. Example

The called target flow must be available, and thus be included somewhere if it is defined in another file. Calls to the target flow share the same execution concept. Specifically, this means that calls to the same target flow from different places can be combined. The call flow elements can be used to simulate function calls. This means the function flows can be included in arbitrary flows, so the content of the input tuple is uncertain and performing desired checks is recommended. For example, in the pythagoras flow you might want to verify that both a and b are present and numeric.

catch (stub)

code

Code example

concept Example {

match '*'

val browser = 'Mozilla/5.0 (Windows NT) Firefox/36.0'

flow

=> code[

ie = `ua.contains('MSIE')`,

firefox = `ua.indexOf('Firefox')>=0`@groovy

]

}

This will result in the following tuple:

{ browser: Mozilla/5.0 (Windows NT) Firefox/36.0, ie: false, firefox: true }

Add values to the data tuple based on specified script code.

Description

Define new fields based on client side or server side code.

Parameters

List of assignments – Scripts to define a value for a field

Each assignment contains an expression which is assigned to a field. This script can either be in Javascript (default, executed client side) or Groovy (executed server side). For the later, add @groovy after the script text.

Input

No specific requirements

Output

The data tuple is extended each field defined in de code element

compact

Compact example

concept all {

match '*'

val firstName = 'John'

val lastName = 'Doe'

flow

=> compact['map']

=> console

}

This will result in the debug plugin showing the following text in the console: {lastName=Doe, firstName=John, map={lastName=Doe, firstName=John}}

Add a new field to the data tuple that contains a map of the current fields as value.

Description

Add a field called ‘compacted’ to the tuple that contains a (unmodifiable) map view of the tuple. The default field name can be overridden

Parameters

Field – Optional: field which the contains map

Input

No specific requirements

Output

An additional field with a map as specificed

console

Console example

concept Hello {

flow

=> code [message = `'Hello'`]

=> console

}

Returns the following in the console:

{ message: Hello }

Description

Outputs the current working set of tuples into the connected console.

Parameters

None

Input

None

Output

Overview of current data.

csv

Convert the data tuple to a CSV representation.

Description

Csv example

concept Example {

match '*'

val firstName = 'John'

val lastName = 'Doe'

flow

=> filter['firstName','lastName']

=> csv[mode='1']

=> debug

plugin debug

}

This will result in the debug plugin showing the following text in the console:

John;Doe

Convert the data tuple to a CSV representation.

Parameters

Mode – field seperator

An alternative mode of operation can be selected using the mode=’1′ parameter. This will use the semicolon (;) as separator, no double quotes to escape data, escape any semicolon in the data (using \;)

Input

No specific requirements

Output

Csv example 2

concept Example {

match '*'

val firstName = 'John;the one and only'

val lastName = 'Doe'

flow

=> filter['firstName','lastName']

// the value of all fields are put in the serialized field,

// and then every occurence of the the semicolon is replaced

=> compact['serialized'] => code[serialized = `serialized.values()*.replace(';','').join(';')`]

=> debug

plugin debug

}

This element will output a new tuple with a single field called ‘serialized’. This field is of type String and contains the CSV formatted data of the input fields. Note that all output flow elements use this serialized field by default as input. The delimiter is a comma (,). All data is escaped using double quotes.

Note that there is no predefined sequence in which the values are shown. Should this be required, use the filter element

Additionally it is necessary that the separator that is used does not occur in the values for the fields. Since any use of the files and separating the columns will result in a different amount of identified values. E.g. in the first example the firstName field could contain a comma (e.g. John,the one and only). The output would then be

John;the one and only;Doe

Processing this data would cause challenges since the additional comma results in 3 fields being identified (or the one and only as last name). Therefore it is advised to filter out any occurrences of the separator in the field values. The code in example 2 does that for the semicolon.

debug

Debug example

concept Example {

match '*'

val firstName = 'John'

val lastName = 'Doe'

flow

=> filter['firstName','lastName']

=> csv

=> debug

}

Send the data tuple to the Javascript console.

Description

Data will pass through the ‘debug’ element unaltered. This allows the use of debug anywhere in a flow to get a peek of the data passing through. This element should be combined with the ‘debug’ plugin to make its output visible in the Javascript console.

Parameters

None

Input

Uses the ‘serialized’ field which contains a string to be shown

Output

No changes to the data tuple

delay

Delay example

concept Example {

match '*'

val a = 'a'

flow

=> delay['30s']

=> debug

plugin debug

}

Output after 30 seconds in the console:

{a=a}

Delay each input tuple for a specified amount of time

Description

For each input tuple, wait for the amount of time specified by the delay parameter before outputting it.

Parameters

delay (string) – Amount of time tuples are delayed

Input

No additional requirements

Output

No changes to the data tuple, though the flow is continued later (the delay)

drop

Drops a tuple from the flow, effectively ending that flow without returning anything.

flow

=> code[test = `"Hello"`]

=> console

=> drop

=> console // will not get executed

expand

Expand example

concept all {

match '*'

flow

=> code[map= `["a":1,"b":1]`@groovy]

=> expand['map']

=> debug

plugin debug

}

This will result in the debug plugin showing the following text in the console:

{map={a=1, b=1}, a=1, b=1}

Interpret the data tuple as a map and include its fields in the current data tuple.

Description

Interpret the data tuple as a map and include its fields in the current data tuple.

Parameters

Field – Optional: field which contains the fields as a map

Input

If no field is defined, the field ‘expanded’ will be use. The Field that is used has to be of type map. Therefore it is the map alternative to addjson, which uses a string as input field

Output

Each item in the map is added as a field to the data tuple.

filter

Filter example

concept Farm {

flow

=> code [`[ 'duck', 'dog', 'cat', 'apple' ]`]

=> nop (duckdog) (removefruit)

flow (duckdog)

=> filter [ 'duck', 'dog' ]

=> console

flow (removefruit)

=> filter [ '-apple' ]

=> console

}

> Result:

{[ duck, dog ]}

{[ duck, dog, cat ]}

Description

As the name suggests, filter applies a filter on the given tuples. A filter can only be inclusive ['dog'] or exclusive ['-apple'] in one call, but may have multiple tuple filters at the same time.

So ['dog', 'cat'] is a valid filter. ['-cat', '-apple'] is as well. But ['dog', '-cat'] is not.

Parameters

| Name | Type | Description |

|---|---|---|

| names | List of Strings | A list of names of keys which should be filtered |

Input

All current tuples

Output

Depending on the used filter, either:

- Only the tuples defined in the

filterparameters

Or

- All the tuples, except for the one defined in the

filterparameters

for .. next

Example for .. next

=> for[i = `0..5`@groovy]

=> console

=> next

This will output tuples {i=0}, {i=1}, {i=2}, {i=3}, {i=4} and {i=5} to the console.

Loop over a bounded collection.

Description

The for and next steps enable looping over a bounded collection. The collection or array is specified in the for step as a single parameter where the field name is the bound variable and the code block returns the collection to loop over.

For loops have a long tradition in imperative programming languages. Since tuples contain all the state required to execute the next steps, the runtime properties of a for loop are different than those found in other languages. For one, the execution of the next step is optional. The for step only sets up the required state for a next step to know what to do.

No state in for loop runs

=> code[a = `1`]

=> for[i = `5..7`@groovy]

=> code[a = `a+i`]

=> console

=> next

=> console

This will output tuples {a=6, i=5}, {a=7, i=6}, {a=8, i=7}, {a=1}

The next step looks up the last registered for loop, advances the index in the collection and the flow executes as if the for step was the previous step. By default there is no state available from the previous loop run. When the collection used in the for loop has been completely exhausted the next step will resume normal execution using the tuple state from before the for step.

To keep state in loop runs, replacing next in previous example:

=> next[`[a:a]`]

This will output tuples {a=6, i=5}, {a=12, i=6}, {a=19, i=7}, {a=19}.

To keep state in between loop runs, you specify the context to carry over as a code block in next step. This code block should return a map/object and the next step will make it available as tuple fields in the next loop run or the termination of the loop.

Akin to a break statement in imperative loops you can prematurely terminate a loop by setting a 'next' field to false in the context that you carry over in the next step.

Set next to false when you want to terminate the loop

=> next[`[a:a, next: a<10]`]

This will output tuples {a=6, i=5}, {a=12, i=6}, {a=12}.

Nesting for loops is also possible. There is nothing special about the second next step in nested for loops. The reason why it operates on the 'outer' loop is that after the first next terminates the resulting tuple is based on the tuple as it arrived at the second for step. It no longer contains information about the second loop.

Nested loops

=> for[i = `0..2`@groovy]

=> for[j = `0..2`@groovy]

=> console

=> next

=> next

This will output tuples {j=0, i=0}, {j=1, i=0}, {j=2, i=0}, {j=0, i=1}, {j=1, i=1}, {j=2, i=1}, {j=0, i=2}, {j=1, i=2}, {j=2, i=2}.

Since there is nothing special about the second next step, there is no way to 'jump' out of the inner loop and skip to the next element in the outer loop (aside from using the 'next' field in the context as described above and terminating the inner loop). You can optionally specify the bound variable, of a for step, in the next step by name. The second next step would be next['i'], referring to the bound variable of the outer loop.

Nested loop with bound variable

=> for[i = `0..2`@groovy]

=> for[j = `0..2`@groovy]

=> console

=> if[`j>Math.random()*2`] (next_i)

=> next

=> (next_i) next['i']

Random output like {j=0, i=0}, {j=0, i=1}, {j=1, i=1}, {j=0, i=2}, {j=1, i=2}

Parameters for

| Name | Type | Description |

|---|---|---|

| [varname] | codeblock | Set a bound variable iteratively to the elements of a collection or array that is the result of evaluating the code block |

Output for

The input tuple with the bounded variable field set to the current value in the collection of the for loop.

Parameters next

| Name | Type | Description |

|---|---|---|

| <anonymous> | codeblock | Returns a map/object containing context variables that should be carried over between for loops and after termination of the loop |

| <anonymous> | string | The name of a bound variable used in identifying which for loop this next step should act upon |

Output next

The result after termination of the loop, either because the collection has been exhausted or because the 'next' field in the context map/object was true.

if

If example

flow

=> if[`beer == 'Heineken'`] (no-heineken)

=> ftp[..]

flow (no-heineken)

=> sql[..]

The expression in the if flow element in this example evaluated whether the beer parameter is set to a specific value. If this is the case, the flow is continued as defined (an no other flows are initiated). In this case the FTP element is executed. If the expression is not true, the no-heineken flow is initiated and the current flow stops (nothing is sent by FTP). With all of the current data in the data tuple the SQL element is executed.

Continue in the current data stream only if a condition is met.

Description

The if flow evaluates an expression and continues with the flow if the expression evaluates to true. If the evaluation evaluates to false, all named flows provided with the if flow element are executed.

Output

The current flow is continued or stopped based on the evaluation of the expression

ip

Ip Example

concept Example {

match '*'

flow

=> ip

=> debug

plugin debug

}

Output:

{ip=81.30.41.185}

Add the visitor’s IP address to the data tuple.

Description

The ip flow adds the IP address attached to the current tuple’s original HTTP request as a field named ip to the data tuple. Note that some flow elements might make it impossible to access the original request, notably aggregation flows and the join flow.

Output

ip(string) – The IP address of the current tuple’s original HTTP request.

Remarks

Some flow elements might make it impossible to access the original request, notably aggregation flows and the join flow.

join

Joins all tuples into a single tuple, containing a map of all tuples.

join example

flow

=> code[test = `["foo","bar"]`]

=> split['test'] // we now have two separate tuples: {test=foo}, {test=bar}

=> join

=> console // outputs: a map containing two tuples: [ {test=foo}, {test=bar} ]

json

JSON example 1

concept Example {

match '*'

val a = 'a'

val b = `1`

flow

=> json

=> console

}

Output:

{"b":"1","a":"a"}JSON example 2

concept Example {

match '*'

val a = 'a'

val b = `1`

flow

=> json['c']

=> console

}

Convert all fields in the data tuple to a JSON representation.

Description

Takes the input tuple and converts it to JSON. No escaping is performed. By default, the output tuple contains a single field called serialized, containing the JSON representation of the input fields. It is also possible to specify a field name as a parameter, in which case that field is added to the tuple, containing the JSON representation of the input fields.

Parameters

| Name | Type | Description |

|---|---|---|

| field | string | Field name in which the JSON result will be stored |

Output

If the json is used without a parameter:

| Name | Type | Description |

|---|---|---|

| serialized | string | JSON object string of the input tuple's fields |

If the field parameter is used:

| Name | Type | Description |

|---|---|---|

| field | string | Field name in which the JSON result will be stored |

Output:

{b=1, a=a, c={"b":"1","a":"a"}}

Remarks

- No escaping is performed.

- The fields in the JSON object string are in arbitrary order

pattern

example pattern

flow

=> session['sessionid']

=> pattern[previous = 'Global', current = 'Global']

Recognize patterns of concept access and make the collected information from those concepts available.

Description

The pattern element requires the presence of a ‘session’ field in the input tuple to operate. This field should expose ajava.util.Map that can be used to store session related data on the server (see the session element). Concept access will be recorded in the session. An output tuple will only be produced when a matching pattern is discovered. The output tuple will contain all the fields of the input tuple plus any named parameters.

In the example an output tuple will be produced when two Global concept accesses occur and the last access is the current request. Since both parameters in the example are ‘named’ (namely ‘previous’ and ‘current’), these fields will be added to the output tuple. They contain a reference to the data collected for these concepts when they reached the pattern element in the past.

concept Global {

val url = `location`

val sessionid = `dimml.cookies.get('session')`

flow

=> session['sessionid']

=> pattern[previous = 'Global', current = 'Global']

=> select[`previous.url.contains('/orderform.html')`@groovy]

=> ...

}

This will collect the values ‘url’ and ‘sessionid’ on the client and start flow processing. First a ‘session’ field is added containing a server-side session based on the ‘sessionid’ field. Then pattern matching is executed to detect the occurence of two ‘Global’ concept accesses. Then only those matches are selected where the ‘previous’ access was for a page that contained ‘/orderform.html’ as part of the URL.

property (stub)

purpose (stub)

route (improve)

Route example

@groovy

concept Route {

match '*'

val data = `['A', 'B', 'c']`

flow